Hello, I’ve been having some problems with Reactor and couldn’t figure out why. I now think they have something to do with my Zigbee network.

My entire Zigbee network is down. I’ve tried restoring from a backup taken about a week ago (I definitely wasn’t having any problems then), but the same problem persists. I have 6 Stelpro SMT402 Zigbee thermostats and 2 Iris Smart Plugs. None of those devices are responding to commands.

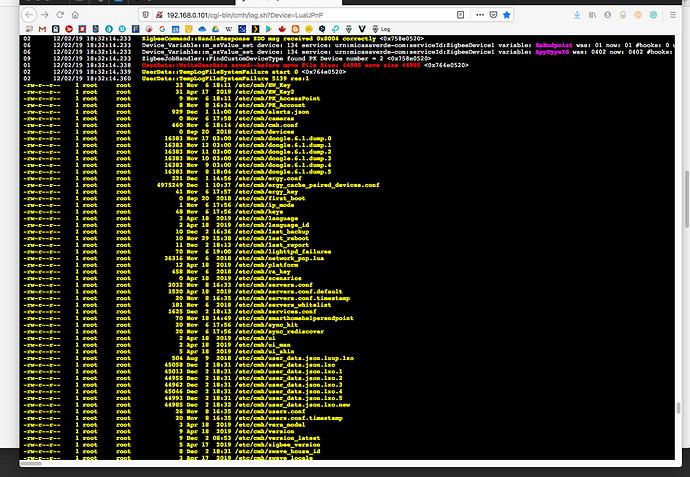

I’m getting this in the log for all of my Zigbee devices. (Device 126 is a Stelpro thermostat, but I’m getting the same sort of logs for all of them.)

JobHandler::Run job#34 :zb_polling dev:126 (0x187f4b0) P:100 S:0 Id: 34 is 49.577123000 seconds old <0x7583c520>

02 12/01/19 10:02:01.683 ZBJob_PollNode::Run job#34 :zb_polling dev:126 (0x187f4b0) P:100 S:0 Id: 34 Sending Command <0x7583c520>

02 12/01/19 10:02:06.390 ZigbeeCommand::HandleResponse ACK Delivery Failed with status 0x66 <0x75a3c520>

01 12/01/19 10:02:06.391 ZBJob_PollNode::receiveFrame job#34 :zb_polling dev:126 (0x187f4b0) P:100 S:5 Id: 34 Response Error <0x75a3c520>

02 12/01/19 10:02:06.402 ZBJob_PollNode::Run job#34 :zb_polling dev:126 (0x187f4b0) P:100 S:5 Id: 34 Job finished with errors <0x7583c520>

06 12/01/19 10:02:06.403 Device_Variable::m_szValue_set device: 126 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: ConsecutivePollFails was: 14 now: 15 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:0 <0x7583c520>

02 12/01/19 10:02:06.403 Device_Basic::AddPoll 126 poll list full, deleting old one <0x7583c520>

06 12/01/19 10:02:06.404 Device_Variable::m_szValue_set device: 126 service: urn:micasaverde-com:serviceId:HaDevice1 variable: PollRatings was: 4.70 now: 4.60 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:0 <0x7583c520>

04 12/01/19 10:02:06.405 <0x7583c520>

02 12/01/19 10:02:06.405 JobHandler::PurgeCompletedJobs purge job#34 :zb_polling dev:126 (0x187f4b0) P:100 S:2 Id: 34 zb_polling status 2 <0x7583c520>
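In case it helps anyone compare devices, here is a rough Python sketch that pulls the latest `ConsecutivePollFails` value per device out of lines like the ones above. The regex is only based on the line layout in this excerpt, and `latest_poll_fails` and `sample` are names made up for illustration, so treat it as a starting point rather than a proper log parser.

```python
import re

# Pattern for the variable-change lines, based only on the layout seen in
# this excerpt (assumption: other firmware versions may format them differently).
VAR_RE = re.compile(
    r"Device_Variable::m_szValue_set device: (?P<dev>\d+) "
    r"service: (?P<service>\S+) variable: (?P<var>\S+) "
    r"was: (?P<old>\S+) now: (?P<new>\S+)"
)

def latest_poll_fails(lines):
    """Return {device_id: most recent ConsecutivePollFails value}."""
    fails = {}
    for line in lines:
        m = VAR_RE.search(line)
        if m and m.group("var") == "ConsecutivePollFails":
            # Later lines overwrite earlier ones, so the last value wins.
            fails[int(m.group("dev"))] = int(m.group("new"))
    return fails

# Two lines copied from the log above.
sample = [
    "06 12/01/19 10:02:06.403 Device_Variable::m_szValue_set device: 126 "
    "service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: "
    "ConsecutivePollFails was: 14 now: 15 #hooks: 0 upnp: 0 skip: 0 "
    "v:(nil)/NONE duplicate:0 <0x7583c520>",
    "06 12/01/19 10:02:06.404 Device_Variable::m_szValue_set device: 126 "
    "service: urn:micasaverde-com:serviceId:HaDevice1 variable: "
    "PollRatings was: 4.70 now: 4.60 #hooks: 0 upnp: 0 skip: 0 "
    "v:(nil)/NONE duplicate:0 <0x7583c520>",
]

print(latest_poll_fails(sample))  # {126: 15}
```

Feeding it the whole log instead of `sample` should show whether every Zigbee device is accumulating failures at the same rate.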

02 12/01/19 10:05:12.101 ZigbeeJobHandler::ServicePollLoop Adding PollJob for Device 96 <0x7663c520>

02 12/01/19 10:05:12.102 ZigbeeJobHandler::ServicePollLoop Adding PollJob for Device 99 <0x7663c520>

02 12/01/19 10:05:12.123 ZigbeeJobHandler::ServicePollLoop Adding PollJob for Device 101 <0x7663c520>

02 12/01/19 10:05:12.123 ZigbeeJobHandler::ServicePollLoop Adding PollJob for Device 104 <0x7663c520>

02 12/01/19 10:05:12.124 ZigbeeJobHandler::ServicePollLoop Adding PollJob for Device 110 <0x7663c520>

02 12/01/19 10:05:12.125 ZigbeeJobHandler::ServicePollLoop Adding PollJob for Device 121 <0x7663c520>

02 12/01/19 10:05:12.125 ZigbeeJobHandler::ServicePollLoop Adding PollJob for Device 126 <0x7663c520>

02 12/01/19 10:05:12.126 ZigbeeJobHandler::ServicePollLoop Adding PollJob for Device 133 <0x7663c520>

02 12/01/19 10:05:12.132 ZBJob_PollNode::Run job#61 :zb_polling dev:96 (0x175b318) P:100 S:0 Id: 61 Sending Command <0x7583c520>

02 12/01/19 10:05:16.844 ZigbeeCommand::HandleResponse ACK Delivery Failed with status 0x66 <0x75a3c520>

01 12/01/19 10:05:16.844 ZBJob_PollNode::receiveFrame job#61 :zb_polling dev:96 (0x175b318) P:100 S:5 Id: 61 Response Error <0x75a3c520>

02 12/01/19 10:05:16.856 ZBJob_PollNode::Run job#61 :zb_polling dev:96 (0x175b318) P:100 S:5 Id: 61 Job finished with errors <0x7583c520>

06 12/01/19 10:05:16.856 Device_Variable::m_szValue_set device: 96 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: ConsecutivePollFails was: 23 now: 24 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:0 <0x7583c520>

02 12/01/19 10:05:16.857 Device_Basic::AddPoll 96 poll list full, deleting old one <0x7583c520>

06 12/01/19 10:05:16.858 Device_Variable::m_szValue_set device: 96 service: urn:micasaverde-com:serviceId:HaDevice1 variable: PollRatings was: 4.20 now: 4.10 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:0 <0x7583c520>

04 12/01/19 10:05:16.858 <0x7583c520>

02 12/01/19 10:05:16.859 JobHandler::PurgeCompletedJobs purge job#61 :zb_polling dev:96 (0x175b318) P:100 S:2 Id: 61 zb_polling status 2 <0x7583c520>

02 12/01/19 10:05:16.859 JobHandler::Run job#62 :zb_polling dev:99 (0x175b508) P:100 S:0 Id: 62 is 4.757592000 seconds old <0x7583c520>

02 12/01/19 10:05:16.859 ZBJob_PollNode::Run job#62 :zb_polling dev:99 (0x175b508) P:100 S:0 Id: 62 Sending Command <0x7583c520>

02 12/01/19 10:05:21.570 ZigbeeCommand::HandleResponse ACK Delivery Failed with status 0x66 <0x75a3c520>

01 12/01/19 10:05:21.570 ZBJob_PollNode::receiveFrame job#62 :zb_polling dev:99 (0x175b508) P:100 S:5 Id: 62 Response Error <0x75a3c520>

02 12/01/19 10:05:21.582 ZBJob_PollNode::Run job#62 :zb_polling dev:99 (0x175b508) P:100 S:5 Id: 62 Job finished with errors <0x7583c520>

06 12/01/19 10:05:21.583 Device_Variable::m_szValue_set device: 99 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: ConsecutivePollFails was: 26 now: 27 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:0 <0x7583c520>

02 12/01/19 10:05:21.583 Device_Basic::AddPoll 99 poll list full, deleting old one <0x7583c520>

06 12/01/19 10:05:21.584 Device_Variable::m_szValue_set device: 99 service: urn:micasaverde-com:serviceId:HaDevice1 variable: PollRatings was: 4.20 now: 4.10 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:0 <0x7583c520>

04 12/01/19 10:05:21.585 <0x7583c520>

02 12/01/19 10:05:21.585 JobHandler::PurgeCompletedJobs purge job#62 :zb_polling dev:99 (0x175b508) P:100 S:2 Id: 62 zb_polling status 2 <0x7583c520>

02 12/01/19 10:05:21.585 JobHandler::Run job#63 :zb_polling dev:101 (0x187f458) P:100 S:0 Id: 63 is 9.463236000 seconds old <0x7583c520>

02 12/01/19 10:05:21.586 ZBJob_PollNode::Run job#63 :zb_polling dev:101 (0x187f458) P:100 S:0 Id: 63 Sending Command <0x7583c520>

02 12/01/19 10:05:26.297 ZigbeeCommand::HandleResponse ACK Delivery Failed with status 0x66
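
One more thing that might be worth measuring is how long each poll waits before the failure comes back. Below is a rough Python sketch that pairs each `Sending Command` line with the next `ACK Delivery Failed` line and reports the gap in seconds. It assumes the timestamps are month/day/year (which does not affect the elapsed time within a day), and `ack_failure_delays` and `sample` are hypothetical names used only for this example.

```python
import re
from datetime import datetime

# Patterns are based only on the lines in this post; they use search(),
# so they work whether or not the lines are wrapped in table pipes.
STAMP_RE = re.compile(r"(\d{2}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}\.\d{3})")
SEND_RE = re.compile(
    r"ZBJob_PollNode::Run job#\d+ :zb_polling dev:(\d+) .*Sending Command"
)
FAIL_RE = re.compile(r"ACK Delivery Failed with status (0x[0-9a-fA-F]+)")

def ack_failure_delays(lines):
    """Return [(device_id, status, seconds)] for each send/failure pair."""
    results = []
    pending = None  # (device_id, time the poll command was sent)
    for line in lines:
        stamp = STAMP_RE.search(line)
        if not stamp:
            continue  # skip lines without a timestamp
        # Assumption: dates are MM/DD/YY.
        when = datetime.strptime(stamp.group(1), "%m/%d/%y %H:%M:%S.%f")
        sent = SEND_RE.search(line)
        if sent:
            pending = (int(sent.group(1)), when)
            continue
        failed = FAIL_RE.search(line)
        if failed and pending:
            dev, sent_at = pending
            elapsed = round((when - sent_at).total_seconds(), 3)
            results.append((dev, failed.group(1), elapsed))
            pending = None
    return results

# Four lines copied from the log above (devices 96 and 99).
sample = [
    "02 12/01/19 10:05:12.132 ZBJob_PollNode::Run job#61 :zb_polling dev:96 "
    "(0x175b318) P:100 S:0 Id: 61 Sending Command <0x7583c520>",
    "02 12/01/19 10:05:16.844 ZigbeeCommand::HandleResponse ACK Delivery "
    "Failed with status 0x66 <0x75a3c520>",
    "02 12/01/19 10:05:16.859 ZBJob_PollNode::Run job#62 :zb_polling dev:99 "
    "(0x175b508) P:100 S:0 Id: 62 Sending Command <0x7583c520>",
    "02 12/01/19 10:05:21.570 ZigbeeCommand::HandleResponse ACK Delivery "
    "Failed with status 0x66 <0x75a3c520>",
]

for dev, status, seconds in ack_failure_delays(sample):
    print(dev, status, seconds)
```

On these sample lines both polls fail about 4.7 seconds after the command is sent, which looks more like a fixed wait running out than a device actively refusing the command, though that is only a guess from the timing.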